Stop Testing the Cake: Why A/B Tests Alone Won’t Help You Learn

“Let’s do a split test to see whether this works. That’s how we’ll figure out if it’s a good idea or not.”

I’ve heard that sentence more times than I can count. And sure, with modern web tools, it’s easy to run an A/B test to decide which wording, color, or layout works better. Sometimes, that’s useful.

But this time, something didn’t sit right.

I had just joined a new team. They were building an AI chatbot to improve customer support. Like many others at the time. Until then, the company had a confusing contact interface built on a decision tree. It rarely helped people find what they needed.

The move to a chatbot made total sense. It wasn’t a risky bet. We weren’t the first to try it. In fact, it seemed like the safe choice. We had the content, the tech, and the motivation to launch.

Once the chatbot was nearly ready, the team started a discussion about its placement on the customer service page. Where should the chatbot entry sit? What should it look like?

My instinct was simple: place it somewhere visible and go live. See what happens. Learn and iterate. But I stayed quiet. I was curious how the team would approach it.

They proposed building four different versions of the chatbot entry, placing them in different spots on the page, and A/B testing them all.

I was surprised. Confused, even. Why build four versions before we had any real usage data? Why not test our assumptions before building?

I asked, gently: “What other ways could we explore this? Are there lighter ways to learn where customers expect the chatbot to be?”

There was a pause. Then someone said: “We only ever do A/B tests here. That’s the norm.”

What’s really happening here: Testing the cake, not the batter

In that moment, I realised that we weren’t testing a hypothesis. We were testing guesses. Fully built. Four times over.

Over time, I’ve seen many teams get caught in the illusion of discovery. Because they are comparing options, it feels like learning. But they are not really learning. They are testing decisions rather than assumptions.

And I get it. Sometimes it is useful. Especially when we can do very easy tests to compare. We’d be fools to not do that if we can set up an A/B test in five minutes and see what works best. But when this is our only tool for learning, we are not really learning.

Because we are testing decisions, we are not thinking about what we want to learn. There is no hypothesis. There is no explicit assumption. We just want to validate our decision. Not learn about it. In other words: we are testing the cake, not the batter.

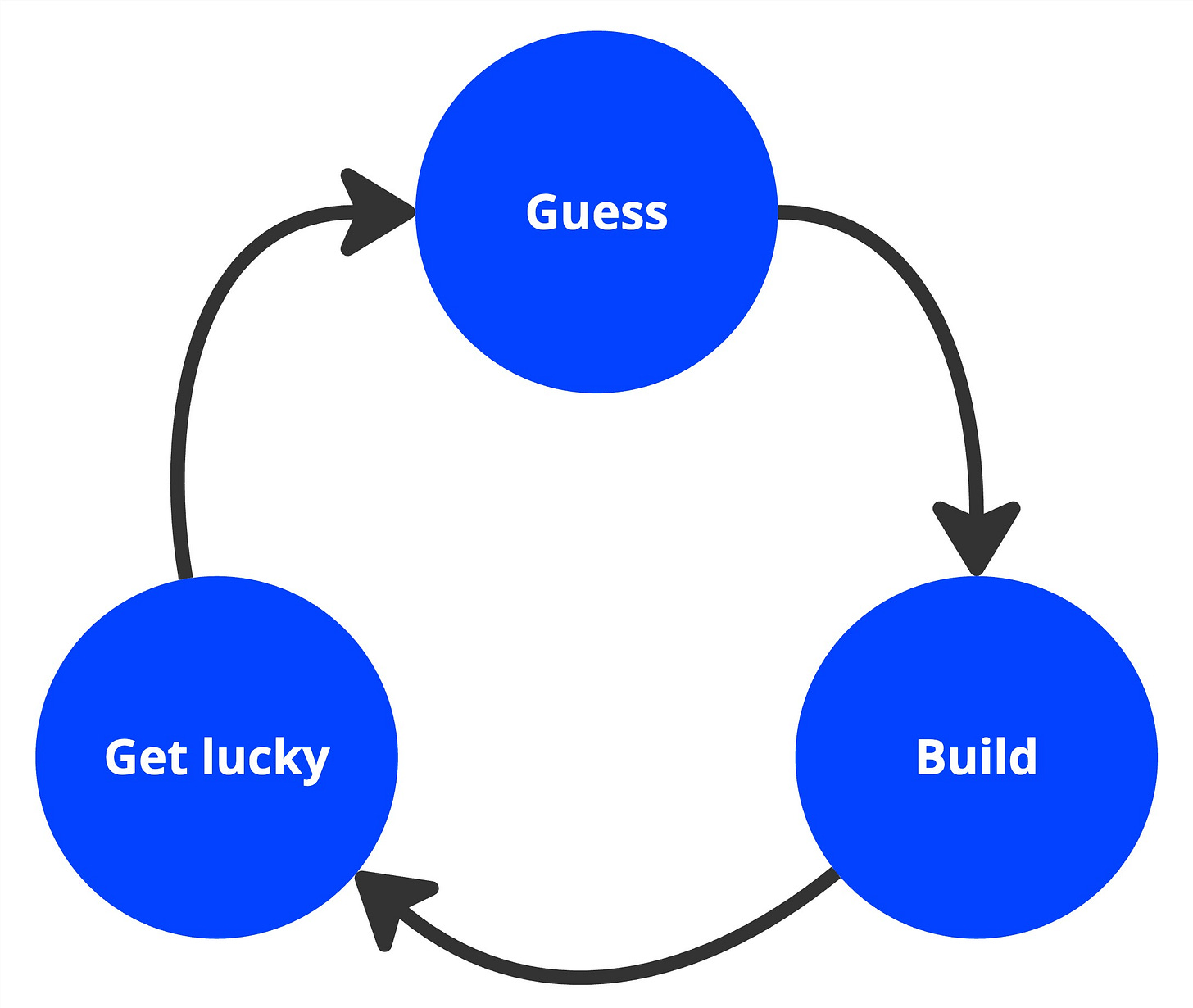

Essentially what we are doing then is just:

And that’s not discovery. That’s gambling. Expensive gambling.

Why we fall into this trap

I’m not here to blame teams that do this. It’s not about poor judgment. Most teams do the best they can with what they know. But often they’re stuck in the system. Everyone around them works this way. It becomes the norm. Let’s break it down.

Psychological safety

By now, I’ve learned that teams don’t always feel safe to speak up when they disagree. Engineers might not agree with the approach. They usually don’t like building multiple versions of the same feature. But it feels safer to comply and just do it.

There’s another side to it: testing something fully polished feels much safer. Teams might feel awkward testing with wireframes, drawings, or anything that’s “not fully done.” It can bring up feelings of insecurity or embarrassment.

Planning culture

Usually, there’s a delivery roadmap. There are ideas on there with untested assumptions. These ideas have been presented to some board. This board has given a thumbs-up. And now these ideas need to come to reality, preferably on time, on budget.

Teams want to deliver what has been “promised.” And building things feeds the illusion of progress. Even if the extra building is redundant, as long as we are moving, others will think we’re making progress. Testing a full implementation fits this idea better.

Tools make it easy

There are many tools that help us run experiments online. Usually, companies have already set them up and enabled them for all teams. They’re available and easy to use. Because of this, they’re often the only tools we think of.

In cognitive psychology, we call this the availability heuristic: a mental shortcut where we rely on whatever comes to mind most easily. What comes to mind can be influenced by how recent something is, or how often we encounter it.

Discovery literacy

If we think A/B tests are the only way to learn, there might be a bigger issue. Even if we are super proud of our A/B test results, this belief is probably a symptom of something else: a lack of discovery literacy. We don’t know enough about discovery.

How can we know what else to do when we haven’t learned about it? It’s a fair question. And it’s no one’s fault. But it’s up to us to help our teams and stakeholders learn that there are better ways. Maybe just by showing them first. Keep it small. Usually, when we start there, people notice and become curious.

What to do instead

There are many ways of doing discovery. When I started, I got overwhelmed by all the available information. And it really took a while before I got comfortable with it. Especially when it comes to assumption testing.

“I have this idea. But how do I break it down? I just don’t see it.”

After a lot of thinking, struggling and, most of all, studying and practising, I started working more methodically.

Ask: What do we actually want to learn?

This question can feel very big. So we need to break down our ideas to understand what we want to learn. How do we do this?

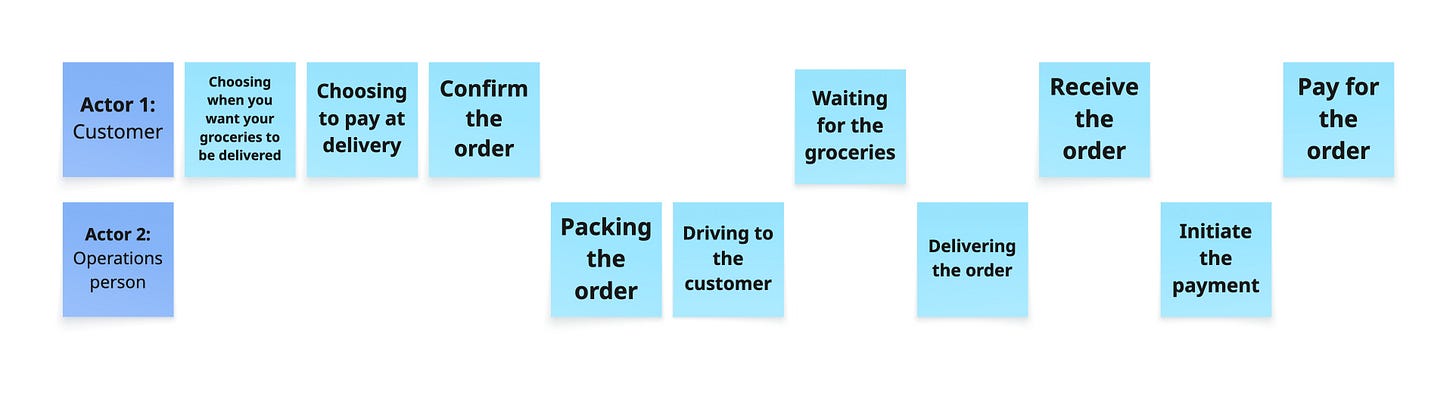

To see how, let’s look at a fictional example that I use in my training sessions: adding pay at delivery for online groceries.

First, we need to understand the steps of our idea. To make this easy, I’d recommend making a story map.

Looking at this story map, I’d also notice that different steps might be done by different people. This is where I think about actors: the people who would be doing those steps. Here we might have a customer and an operations person from the online grocery company.

Usually, when thinking about actors, we might identify steps in our solution that we didn’t think of before. In this case, for a customer it’s waiting for the groceries.

The next step is to map out our assumptions per step. I’ve been calling this a story-assumption map. Here, assumptions are the things that need to be true for our solution to work. They usually differ per context, and there can be many things we think we know.

I usually categorise my assumptions based on the four big risks as described by Marty Cagan:

Value assumptions: Will our users or customers be willing to use, buy, or spend effort in doing this?

Usability assumptions: Will our users or customers understand this easily?

Feasibility assumptions: Are we able to build this given the people, technology and time?

Viability assumptions: Are we able to support this solution as a business? (Are we making money, able to go to market, align departments, etc.)

You don’t have to do this in your story-assumption map. But I’ve learnt that it usually helps me in the next few steps.

Something to take into account here is how we phrase our assumptions. I’ve seen many variations: questions (“Will our customers buy this?”), negative statements (“Customers won’t leave the page”), and so on. But it’s really important to phrase them as positive statements. For example: “Customers will select pay at delivery.”
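If it helps to see the story-assumption map as data, here is a minimal Python sketch. The steps, actors and assumptions are hypothetical, made up for the pay-at-delivery example; the four categories follow Cagan’s risks. The `non_positive` check is only a rough heuristic for illustration, flagging statements that end in a question mark or contain a negation.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class Risk(Enum):
    """Marty Cagan's four big product risks."""
    VALUE = "value"
    USABILITY = "usability"
    FEASIBILITY = "feasibility"
    VIABILITY = "viability"


@dataclass
class Assumption:
    statement: str  # phrased positively: something that must be true
    risk: Risk


@dataclass
class Step:
    actor: str
    action: str
    assumptions: list[Assumption] = field(default_factory=list)


# Hypothetical story-assumption map for "pay at delivery" (illustrative only).
story_map = [
    Step("customer", "chooses a payment method", [
        Assumption("Customers will select pay at delivery.", Risk.VALUE),
        Assumption("Customers will understand what pay at delivery means.", Risk.USABILITY),
    ]),
    Step("customer", "waits for the groceries", [
        Assumption("Customers will have a way to pay ready when the groceries arrive.", Risk.VALUE),
    ]),
    Step("operations", "collects payment at the door", [
        Assumption("Drivers can take payments at the door.", Risk.FEASIBILITY),
        Assumption("Unpaid deliveries stay rare enough to be affordable.", Risk.VIABILITY),
    ]),
]


def assumptions_by_risk(steps: list[Step]) -> Counter:
    """Count how many assumptions fall under each risk category."""
    return Counter(a.risk for step in steps for a in step.assumptions)


def non_positive(steps: list[Step]) -> list[str]:
    """Flag statements phrased as questions or negations (a rough heuristic)."""
    flagged = []
    for step in steps:
        for a in step.assumptions:
            text = a.statement.lower()
            if text.endswith("?") or "won't" in text or " not " in text:
                flagged.append(a.statement)
    return flagged


print(assumptions_by_risk(story_map))
print(non_positive(story_map))
```

Keeping each assumption as a plain positive statement, tagged with its risk, makes it easy to carry into the next steps: mapping, testing and comparing.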

So, we have our assumptions. And we want to learn about these before we start building. Because if most of them are untrue, then there’s no point in making that specific feature. But does that mean that we need to test all of them?

No. That would be a waste. Remember: we want to learn quickly whether we should build this.

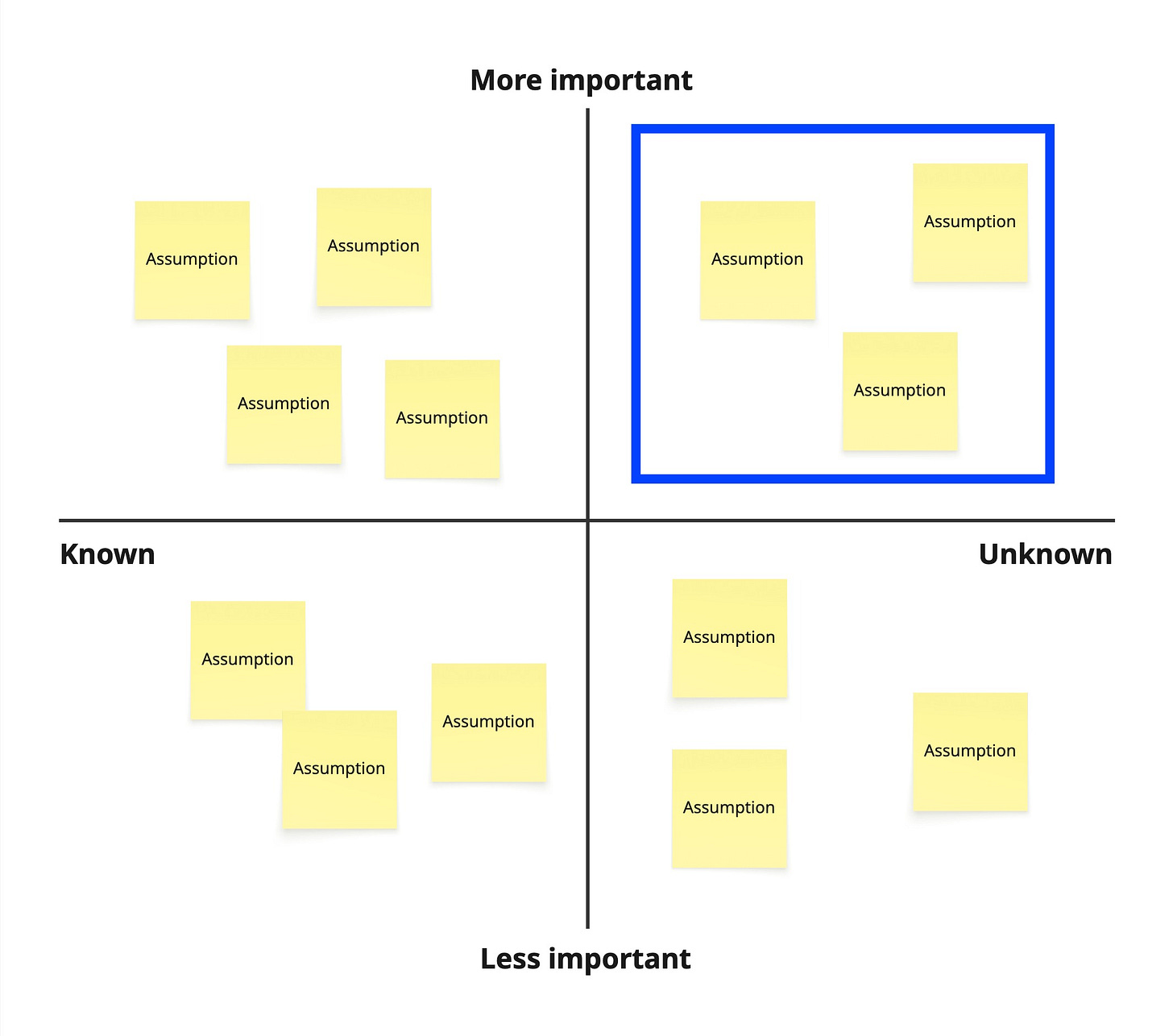

We need to identify our riskiest assumptions. There are many ways of doing this; I usually use assumption mapping. I map each assumption on two dimensions: how important it is for the solution to work, and how much we already know about it. Because sometimes you already know: others have done this before, or it’s the market standard. We don’t want to reinvent the wheel there.

In the top-right quadrant, we find our riskiest assumptions: the ones that are most important for our solution to work, and that we know the least about.

Now we have mapped what we want to learn. So, when the question “what do we want to learn?” pops up, we can answer it.
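The assumption map can be sketched the same way. This minimal example assumes 1 to 5 scores for importance and knowledge (a hypothetical scale; sticky notes on a whiteboard work just as well) and treats scores above or below the midpoint of 3 as high or low. The assumptions and their scores are made up for the pay-at-delivery example.

```python
from dataclasses import dataclass


@dataclass
class MappedAssumption:
    statement: str
    importance: int  # hypothetical scale: 1 = nice to have, 5 = solution fails without it
    knowledge: int   # hypothetical scale: 1 = pure guess, 5 = well known (e.g. market standard)


def quadrant(a: MappedAssumption, midpoint: int = 3) -> str:
    """Place an assumption on the importance/knowledge map."""
    important = a.importance > midpoint
    unknown = a.knowledge < midpoint
    if important and unknown:
        return "riskiest"  # top-right quadrant: test these first
    if important:
        return "important but known"
    if unknown:
        return "unimportant and unknown"
    return "unimportant and known"


def riskiest(assumptions: list[MappedAssumption], midpoint: int = 3) -> list[MappedAssumption]:
    """Top-right quadrant only, most important and least known first."""
    risky = [a for a in assumptions if quadrant(a, midpoint) == "riskiest"]
    return sorted(risky, key=lambda a: (-a.importance, a.knowledge))


# Hypothetical scores for the pay-at-delivery example.
mapped = [
    MappedAssumption("Customers will select pay at delivery.", importance=5, knowledge=2),
    MappedAssumption("Drivers can take payments at the door.", importance=4, knowledge=1),
    MappedAssumption("Customers will understand what pay at delivery means.", importance=4, knowledge=4),
    MappedAssumption("Customers will like the label on the payment option.", importance=1, knowledge=1),
]

for a in riskiest(mapped):
    print(a.statement)
```

Scores sitting exactly on the midpoint stay out of the riskiest quadrant here; that is a design choice for the sketch, and you might prefer to discuss borderline cases as a team instead.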

Test: Choose a lighter, earlier test

We now want to test whether our assumptions are true, and we want to do this as quickly and cost-effectively as possible. This is where I see people struggle, because they don’t know how. And even when they do, it can be overwhelming.

One thing I’ve been doing in the past year: using ChatGPT for this. Before, my teams would usually struggle to come up with hypotheses, tests and success metrics. Now, I explain to ChatGPT what I’m doing and ask it to suggest them. I review its answers, and then I’m ready to go.

If you don’t want to use AI at first, because you want to train your discovery muscle, you’ll need to come up with these yourself.

Your hypothesis is basically a testable statement. For example: We expect that customers will click on pay at delivery.

Then we figure out how to test it. If we want to go very low effort, we could run a fake door test: we add pay at delivery as a payment option that doesn’t actually do anything yet. Selecting it just shows a pop-up explaining that we are testing it, or something similar. There are many types of tests for different assumptions; Pawel Huryn has an extensive list of testing ideas.

After defining your tests, it’s important to determine the success criteria for each one. For example: X% of users click the link. This really depends on the type of test and what you are trying to learn. Remember, your results will never be 100%. Nothing will always be true. But what’s true enough? How do we know that something is less risky?
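A success criterion is easiest to hold yourself to when it is written down before the test starts. Here is a minimal sketch of a fake door test for the pay-at-delivery hypothesis. The `FakeDoorTest` class, the 5% threshold and the 500-visitor minimum are hypothetical placeholders, not recommendations; pick numbers that fit your own context.

```python
from dataclasses import dataclass


@dataclass
class FakeDoorTest:
    hypothesis: str
    success_threshold: float  # minimum click rate, agreed on before the test starts
    min_visitors: int         # don't judge the result before this many visitors
    visitors: int = 0
    clicks: int = 0

    def click_rate(self) -> float:
        return self.clicks / self.visitors if self.visitors else 0.0

    def verdict(self) -> str:
        if self.visitors < self.min_visitors:
            return "keep running"
        if self.click_rate() >= self.success_threshold:
            return "supported"
        return "not supported"


# Hypothetical numbers: placeholders, not advice.
test = FakeDoorTest(
    hypothesis="We expect that customers will click on pay at delivery.",
    success_threshold=0.05,
    min_visitors=500,
)
test.visitors, test.clicks = 1200, 84
print(f"{test.click_rate():.1%} -> {test.verdict()}")
```

With these made-up numbers, 84 clicks out of 1,200 visitors is a 7% click rate, which clears the 5% bar. The minimum visitor count is one simple way to avoid calling a result “true enough” too early.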

Compare: Find your winning idea

I’ve seen teams stop after testing one solution. It left me wondering: how do we find the winning solution if we only test one idea?

I get this question in my training sessions too. I usually recommend testing multiple solutions for the same opportunity. This helps you find what works best. There is never only one idea. There are many. And the assumptions behind those ideas deserve testing.

Usually these ideas have overlapping assumptions, which makes them easier to test. When they don’t, you need to test different things. Remember, we need to do this quickly and cost-effectively. How can you make that happen?

After you’ve done this testing, compare your ideas. Which one has the most assumptions that are true? Did you find your winner?

If not? You need to go back to the drawing board. If yes, great. You’ve de-risked how to solve for an opportunity.
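Comparing ideas by their tested assumptions is simple bookkeeping. In this sketch, “pay by invoice” is a hypothetical second idea for the same opportunity, and all results are made up. Because the first assumption is shared, one test result counts for both ideas.

```python
# Hypothetical test results: True = the assumption held up, False = it didn't.
results = {
    "Customers want to pay only after receiving their groceries.": True,
    "Customers will select pay at delivery.": True,
    "Drivers can take payments at the door.": False,
    "Customers will select pay by invoice.": True,
}

# Two hypothetical ideas for the same opportunity; the first assumption is shared.
ideas = {
    "pay at delivery": [
        "Customers want to pay only after receiving their groceries.",
        "Customers will select pay at delivery.",
        "Drivers can take payments at the door.",
    ],
    "pay by invoice": [
        "Customers want to pay only after receiving their groceries.",
        "Customers will select pay by invoice.",
        "Customers will pay invoices on time.",  # not tested yet
    ],
}


def compare(ideas: dict[str, list[str]], results: dict[str, bool]) -> list[tuple[str, int, int, int]]:
    """Return (idea, held, failed, untested), most assumptions held first."""
    rows = []
    for idea, assumptions in ideas.items():
        held = sum(1 for a in assumptions if results.get(a) is True)
        failed = sum(1 for a in assumptions if results.get(a) is False)
        untested = len(assumptions) - held - failed
        rows.append((idea, held, failed, untested))
    # Fewer failures break ties between ideas with the same number of held assumptions.
    return sorted(rows, key=lambda r: (-r[1], r[2]))


for idea, held, failed, untested in compare(ideas, results):
    print(f"{idea}: {held} held, {failed} failed, {untested} untested")
```

Here both ideas have two assumptions that held up, but “pay by invoice” has no failures and one untested assumption, so it isn’t a winner yet. A count is only a starting point: if one of your riskiest assumptions fails, that alone can rule an idea out.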

Towards a smarter testing culture

Doing all this might feel uncomfortable at first. I’ve been there. But we shouldn’t give up. There are better ways than just A/B testing to learn. It starts by making discovery a habit—something your team does by default.

Encourage people to question the standard approach. Ask more questions. Model the behavior. And if you’re a leader, focus on creating an environment where this is possible.

Testing isn’t about statistical significance. It’s about meaningful signals. About learning fast. About reducing risk and creating space to figure things out. To try and fail, then go again.

The courage to test differently

So, that chatbot entry. What could we have done differently?

The real questions were:

Will this work better than the old contact interface? — We already knew the answer from market signals and internal motivation.

Will customers find the chatbot easily? — Assumption: Customers will spot and understand the entry. Test: Add a simple button on top of the page and monitor clicks.

I thought about this, but I didn’t say it. I held back. I had just started. I wanted to understand how they worked. But I also felt unsure.

What if my ideas were weird? What if they rejected everything I said?

That’s part of the issue too. We don’t just need better frameworks. We need environments where people feel safe to share better ways of working. Most people aren’t against discovery. They’re just afraid to look wrong or alone.

Still, every time I’ve seen someone suggest a smaller, faster, cheaper way to learn, the team appreciated it. Because it lowered the pressure. Because it made learning part of the process again.

So the next time you hear “let’s A/B test it,” pause for a moment.

Ask: “What do we actually want to learn?” Then: “What’s the smallest way we can learn that, without building the whole thing?”

That question alone might change how your team works.

Maybe not all at once. But one chatbot button at a time.